Market Insight: AI Bubble Risk And Capital Cycles

Executive Summary

This report examines the formation of the AI capital system, analysing how capital flows, infrastructure build-outs and market expectations may currently outpace verifiable productivity gains and cash flows. It defines the four distinct phases of this economic cycle, tracing the trajectory from the initial investment supercycle and the hidden accumulation of structural stress, to visible market fracturing and eventual systemic rebalancing. The analysis explores critical tension points – such as valuation concentration, the inference utilization gap and the enterprise return on investment (ROI) wall – showing how current reinforcing loops may trigger a sharp correction in asset prices and capacity. It also highlights strategic playbooks for vendors and buyers, outlining how market participants can transition from narrative-led expansion to evidence-based deployment, to capture durable value in a post-bubble economy.

Summary for decision-makers

- The AI market can be viewed in terms of an economic cycle, in which capital, infrastructure build-out and expectations currently far outpace verified productivity gains and organizational adoption.

- Tension is quietly accumulating via massive infrastructure overbuild (the inference utilization gap), heavily subsidized application layers, and an ‘ROI wall’ preventing the scaling of enterprise pilots into core production systems.

- A market correction would trigger a capital reset, resulting in the rapid consolidation of software firms, an extinction event for weakly differentiated applications, and a deflationary shift in compute pricing.

- To achieve resilience, vendors must urgently prioritize ‘default alive’ economics, pivot aggressively to owning specific vertical workflows, and architect for model fungibility, to benefit from falling compute costs.

- Enterprise buyers are moving from widespread experimentation to strict capital discipline, de-risking structural dependency on single providers, and demanding explicit outcome-based contracts and clear governance.

- The ecosystem will reorganize around fewer, deeper nodes that demonstrate measurable, repeatable value. The focus will shift from momentum-led hype to evidence-anchored, industrial-grade deployment, governance and trust.

The AI capital system

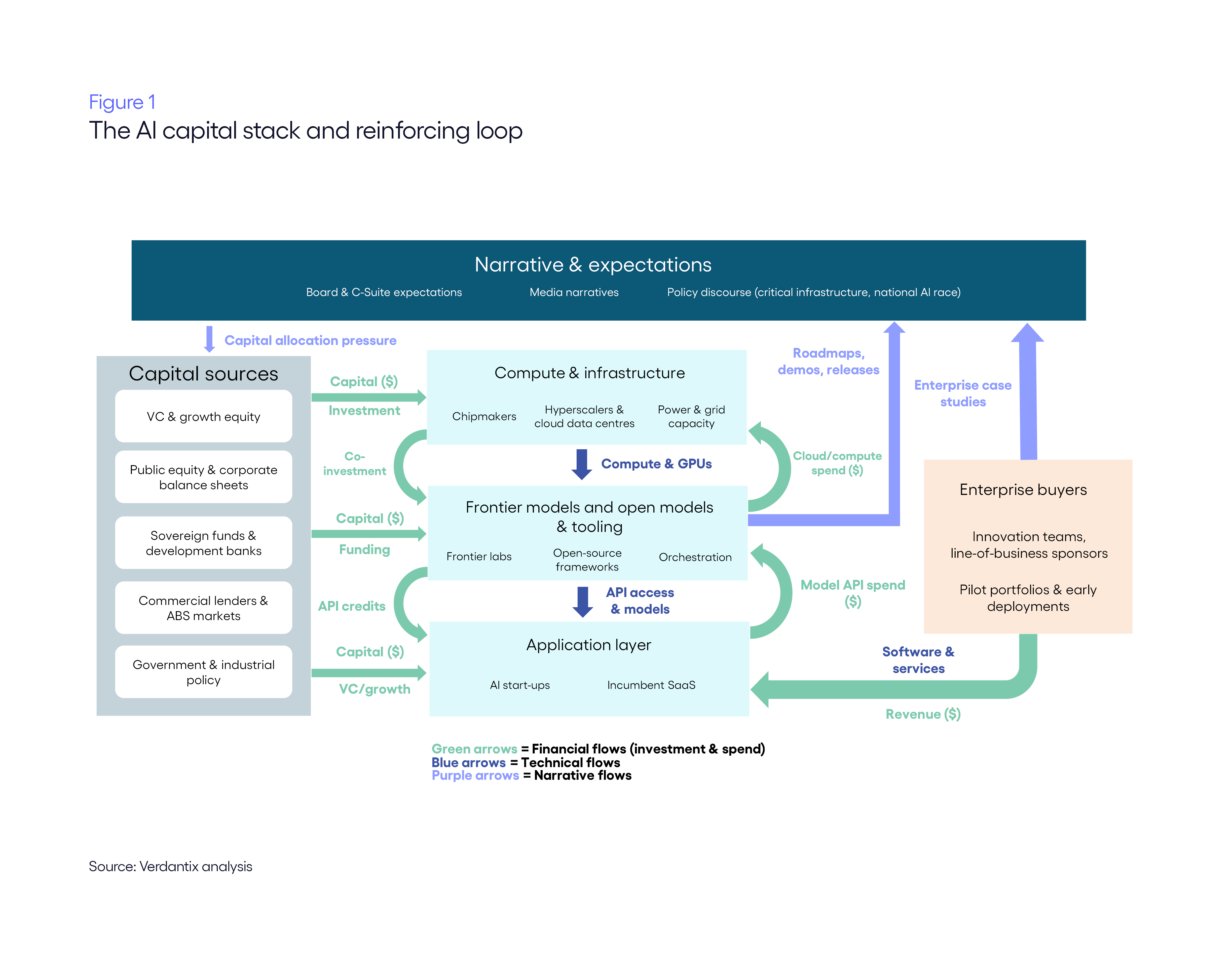

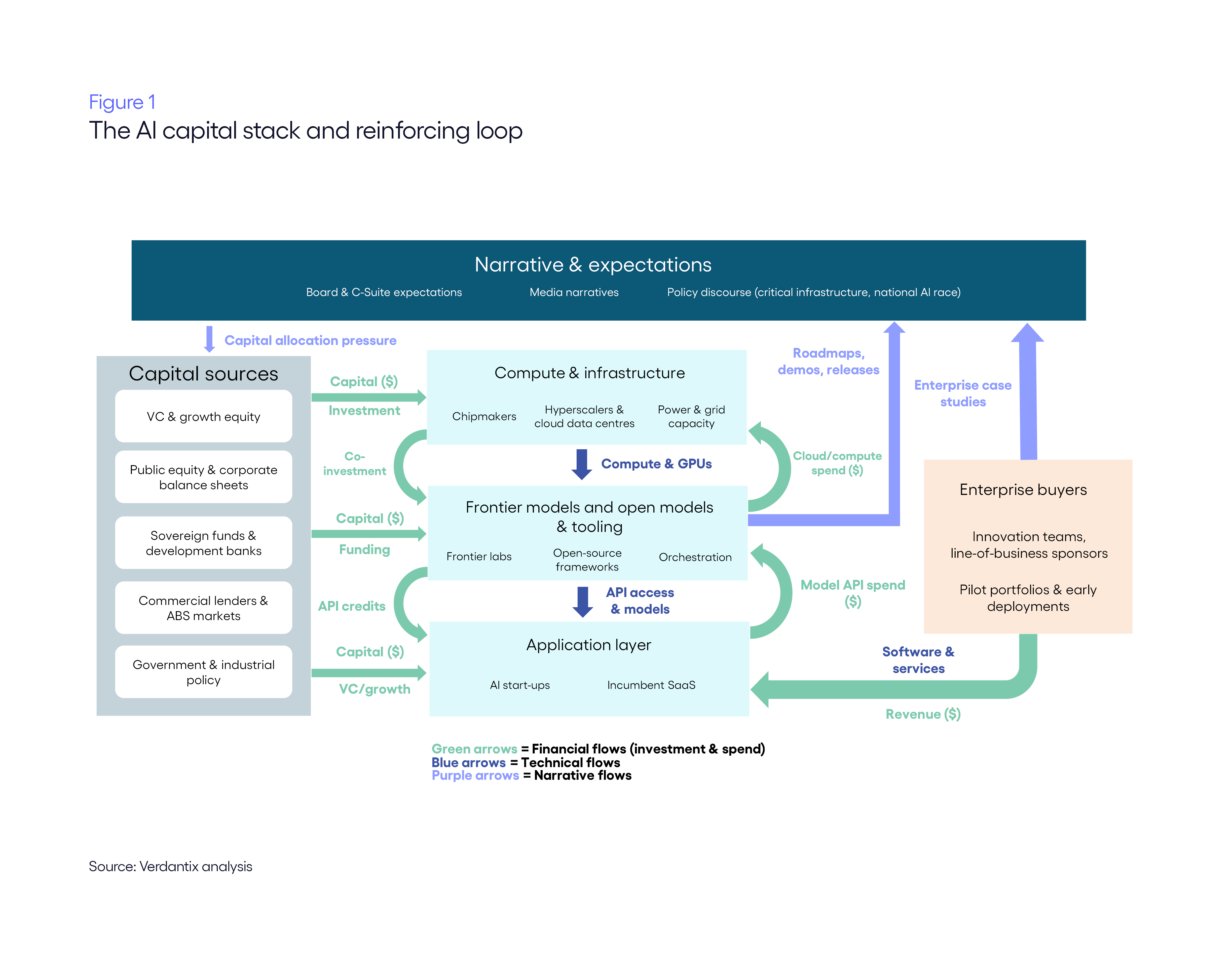

An economic bubble forms when capital, expectations and asset prices rise much faster than the underlying cash flows, productivity gains and adoption that can ultimately justify them. In the current AI cycle, that dynamic is not confined to a single market segment or technology layer. It spans a tightly connected set of actors: hyperscalers and chipmakers building AI-optimized compute; frontier model labs advancing general-purpose intelligence; AI software providers targeting high-value domains; enterprise buyers running pilots and early deployments; and the capital and policy system around all of these – comprising venture capital (VC), private equity, lenders, governments and regulators. Capital moves through this entire stack in coordinated waves, linking infrastructure, software, policy and finance in a single, interdependent market system.

At the core of this system is a powerful reinforcing pattern, in which capital funds new capacity, capacity strengthens the perception of AI inevitability, and that perception attracts still more capital (see Figure 1). Expectations can accelerate faster than real organizational adoption: infrastructure and funding can scale in months, while workflow redesign, governance and return on investment (ROI) validation unfold over years. As scale builds, perceived inevitability hardens – boards, investors and policymakers increasingly treat AI not as an optional technology cycle, but as critical national and corporate infrastructure. Whether this ultimately resolves into a durable deployment-led economy or a sharp correction in valuations and capacity depends on how quickly real value capture, utilization and financial discipline converge with the speed of capital formation.

Phase 1: The investment supercycle

The first phase of the current AI cycle is defined by how quickly capital has moved from experimentation to system-building. What began as aggressive venture funding has expanded into a multi-layered investment engine spanning equity markets, infrastructure finance, public-sector funding and corporate balance sheets. Money is not just backing individual products or start-ups, but shaping the physical, organizational and financial foundations on which future AI use will depend. Compute, data centres, semiconductors, developer tooling, vertical platforms and national capacity programmes are all being scaled in parallel. This phase matters, because early capital allocation decisions establish control points, dependencies and cost structures that persist long after the initial excitement fades. Decisions around who gets funded, what infrastructure is built, which tools become standard and which vendors are embedded will strongly influence how flexible, competitive and resilient the AI ecosystem proves to be in the next stages of its evolution. The key elements of this phase are:

- VCs, sovereign funds & corporates funnelling mega-rounds into frontier model labs and aligned hyperscalers.

In 2025, capital at the very top of the stack has concentrated around a small set of labs. OpenAI raised $40 billion at a $300 billion post-money valuation to fund model R&D and global compute build-out. Anthropic followed with a $13 billion Series F at a $183 billion valuation, led by ICONIQ and large asset managers. In Europe, Mistral secured a $2 billion Series C led by ASML at a $13.7 billion post-money valuation. These rounds are often paired with long-dated cloud and graphics processing unit (GPU) commitments, plus joint infrastructure projects such as the multi-hundred-billion-dollar ‘Stargate’ data centre initiative involving OpenAI, Oracle and SoftBank (see Verdantix Market Trends: LLMs And AI Cloud Services). The effect is to embed a small group of labs and infrastructure partners as decision hubs, setting de facto standards on model roadmaps, safety posture and compute pricing, while everyone else – from start-ups to states – increasingly becomes a price-taker on capacity and roadmap access.

- Hyperscalers & chipmakers reinvesting earnings & bond proceeds into AI data centres and accelerator supply.

Cloud giants use current AI revenue, plus bond markets, to front-load a massive build-out of capacity. In 2025 alone, Amazon, Google, Meta and Microsoft were expected to spend about $315 billion on data centre capital expenditure (CAPEX). On the silicon side, TSMC lifted CAPEX to $29.76 billion in 2024 and is targeting $38 billion to $42 billion in 2025, with around 70% aimed at the advanced 3nm and 2nm processes that underpin GPUs and AI accelerators. Banks are now structuring multi-billion-dollar project loans, such as a proposed $38 billion package to fund new Oracle/Vantage sites for OpenAI workloads. Once this hardware, power and grid capacity is committed, it locks in expectations of very high AI workload growth and makes the eventual adjustment much slower and more politically sensitive.

- VCs and strategic investors backing open-source communities and orchestration/tooling vendors.

Below the model layer, capital fans out into the toolchains that make agents usable (see Verdantix Market Trends: Enterprise AI Agent Adoption). LangChain, the dominant open-source framework for building agents, raised $125 million in 2025 at a $1.25 billion valuation to “build the platform for agent engineering”, backed by IVP, Sequoia Capital and others. CrewAI, which maintains an open multi-agent framework plus an enterprise edition, has raised about $18 million across inception and Series A rounds and reports more than 10 million agents deployed on its platform (see Verdantix Agentic AI Essentials Glossary). Similar funding is flowing into evaluation, monitoring and safety tooling. These cheques are small compared with those for frontier labs, but they decide which application programming interfaces (APIs), execution patterns and best practices developers standardize on. Once enough teams are trained on a specific framework, and ecosystems form around it, that tooling layer quietly shapes how tasks are broken down, how agents call tools, and how performance is measured – choices that are difficult to unwind later.

- Enterprise innovation budgets & growth equity capital flowing into vertical AI providers with strong ROI stories.

Domain specialists turn AI into line-of-business propositions. In healthcare, Abridge raised $300 million in a 2025 Series E at a $5.3 billion valuation for ambient clinical documentation, which is now rolled out across major US health systems. Legal AI start-up Harvey closed a $150 million round at an $8 billion valuation – its third raise in 2025 – on the promise of transforming law firm workflows. In customer experience, Parloa secured $120 million in Series C funding at a $1 billion valuation to scale its agentic AI platform for enterprise contact centres. In each case, customers are not just buying software – they are entering multi-year commitments, co-funding roadmap features and handing over privileged data. That behaviour sets expectations inside organizations about where AI ‘belongs’, how returns are quantified and which vendors become embedded gatekeepers for domain-specific data and processes.

- Incumbent software platforms deploying balance sheets to acquire AI-native start-ups.

Public-market software giants are converting their market capitalizations and cash flows into acquired AI capabilities. ServiceNow agreed to buy Moveworks, an AI assistant platform for employee support, for $2.85 billion in cash and stock – its largest deal to date – to embed autonomous agents more deeply into information technology service management (ITSM) and employee workflows. Workday signed and then completed a roughly $1.1 billion acquisition of Swedish AI firm Sana, folding its search, agents and learning tools into Workday’s human resources (HR) and finance cloud. These moves create a feedback loop: high public valuations provide ‘expensive’ stock to spend, which validates high private valuations for AI start-ups aiming to be acquired, which in turn encourages more company formation and capital allocation. Strategically, they also decide whether AI shows up as a separate platform or disappears into existing suites, which matters for future pricing power and customer flexibility.

- Governments, development banks and commercial lenders financing fabs, data centres and public compute.

States and quasi-public financiers are underwriting the heaviest infrastructure. In the US, the CHIPS and Science Act authorizes some $280 billion, including $52.7 billion in direct funding for domestic semiconductor manufacturing, R&D and workforce development, plus a separate investment tax credit for manufacturing facilities. The EU is rolling out ‘AI Factories’ tied to the EuroHPC supercomputing network – 19 factories and 13 ‘antennas’ that give start-ups and small and medium-sized enterprises (SMEs) access to high-end compute – alongside plans for a network of AI ‘gigafactories’. The UK has committed £300 million to its AI Research Resource cluster and published a long-term Compute Roadmap to expand national capacity. EuroHPC has also channelled grants, such as €61.6 million to Axelera AI for an inference chip for data centres. Once these programmes are in motion, they effectively guarantee that large pools of capital – and political capital – are tied to sustaining abundant AI compute, even if private demand later cools.

Phase 2: Building mechanics – and where stress builds

Phase 1 is dominated by reinforcement: capital, capacity and expectations rising together. Phase 2 is where the internal mechanics of the system begin to matter. The key dynamic is no longer how fast money and infrastructure can scale, but how unevenly that scale is absorbed by real demand, power, governance and organizational capacity. Stress does not yet show up as outright failure; instead, it accumulates quietly in balance sheets, utilization rates, pricing structures, unit economics and dependency patterns. The mechanisms do not imply a turning point on their own. They describe where structural tension builds, how the system becomes more sensitive to shocks, and which layers are most exposed if sentiment or utilization shifts. The six key mechanics are:

- Valuation and dependency concentration at a few infrastructure and model hubs.

Capital, revenue and technical dependency are clustering around a small group of chipmakers, hyperscalers and model labs. In 2025, AI start-ups attracted about $89 billion of VC funding – roughly 34% of all global venture funding, despite representing only 18% of funded companies. Other estimates suggest that around a third of US and European VC since 2020 has gone into AI, with recent quarters dominated by megadeals for frontier labs and AI infrastructure. These same hubs now anchor equity indices and trade on elevated earnings multiples that already price in many years of high growth, leaving limited room for disappointment if utilization or pricing power falls short, or if regulation bites. Central banks such as the Bank of England have started to flag AI-linked asset prices and concentration as a potential financial stability vulnerability. Structurally, the system depends on a few nodes continuing to meet very demanding expectations.

- Infrastructure overbuild and the inference utilization gap.

On the physical side, data centre and chip capacity are being built faster than power systems and monetizable workloads can catch up. Global data centre equipment and infrastructure spend reached about $290 billion in 2024, with forecasts of a $1 trillion market by 2030, largely driven by AI workloads. Yet in Santa Clara, NVIDIA’s backyard, two new facilities totalling nearly 100MW of IT load are standing empty because they cannot yet secure enough grid power, and may sit under-utilized for years. Hyperscaler leaders have also acknowledged racks of high-end GPUs ‘sitting in inventory’ or powered off due to power and data hall constraints, with reports of GPUs ‘going dark’ in multiple regions. At the same time, digital infrastructure asset-backed securities have grown eightfold in under five years, with data centres backing around 60% to 65% of that segment – a pattern reminiscent of the long-haul fibre network build-out during the dotcom boom, when capacity arrived years ahead of sustainable demand. The structural tension here is clear: CAPEX and debt commitments assume high, sustained AI utilization, but power constraints and slower-than-expected enterprise adoption create an inference utilization gap, where expensive capacity is only partially earning its keep.

- Subsidized usage and fragile application-layer unit economics.

At the application edge, a large slice of today’s AI activity sits on top of subsidies, rather than robust, proven willingness to pay. Amazon has pledged $230 million in Amazon Web Services (AWS) credits for generative AI (GenAI) start-ups, with accelerator participants eligible for up to $1 million each, and rivals such as Google and Microsoft run similar programmes. These credits stack with discounted foundation-model pricing, free tiers and ‘all-you-can-eat’ per-seat plans. Meanwhile, long context windows, multi-step agents and heavy tool use multiply token consumption behind the scenes. A distinct pattern has emerged: VC funding flows into lightweight applications whose primary differentiation is user experience (UX), workflow integration and branding on top of third-party models and APIs. While subsidies are abundant, these products can show fast usage growth and attractive headline annual recurring revenue (ARR), despite carrying high variable costs and having little moat. Structurally, this creates a wedge between apparent traction and sustainable economics. Once credits expire and true cost-to-serve is exposed, many of these thin ‘wrapper’ applications will struggle to raise prices or cut usage without breaking their own growth story, leaving them structurally exposed if funding or demand conditions tighten.

- The enterprise ROI wall – and pilot-to-production breakdown.

On the demand side, many organizations are running into an ROI wall. Adoption metrics are high, but measured impact is often weak. A 2025 Massachusetts Institute of Technology (MIT) study of enterprise GenAI deployments found that around 95% of pilots produced no measurable profit and loss (P&L) impact, with only a small minority generating meaningful, repeatable value. The failures were not primarily due to model quality, but stemmed from poor workflow integration, generic tools bolted onto processes and unclear ownership. In practice, firms accumulate large portfolios of pilots funded from innovation budgets; however, only a small fraction are hardened, integrated and scaled into core systems. Structurally, that means that cash outflows and internal attention build faster than durable productivity gains. As this backlog of ‘interesting but unproven’ projects grows, budget decisions become driven by narrative and peer comparison effects, rather than clear unit economics, increasing the gap between expectations and realized returns.

- Circular capital flows and leveraged balance sheets across the stack.

Another source of stress is how money cycles through the ecosystem, and the question of who ultimately holds the downside. Cloud platforms, chipmakers and labs are increasingly intertwined as investors, suppliers and customers. Amazon’s partnership with Anthropic combines up to $8 billion of equity and commercial commitments and makes AWS the primary training partner for Anthropic’s flagship models. Microsoft’s revised agreement with OpenAI includes a commitment from OpenAI to purchase $250 billion of Azure services over several years, alongside long-term rights to sell OpenAI models. New three-way deals now see Anthropic pledging $30 billion of Azure spend, while Microsoft and NVIDIA each invest billions in Anthropic and jointly expand GPU capacity. Underneath this, data centre expansion is increasingly debt-financed, with investment-grade bonds, private credit and asset-backed securities (ABS) funding multi-billion-dollar campuses. The structure pushes apparent AI revenue up, while shifting risk onto lenders, late-stage investors and infrastructure owners if end-user demand under-delivers.

- Model commoditization and erosion of the frontier scarcity premium.

There is a structural question about whether frontier model performance and scarcity can sustain today’s pricing power. Much of the investment logic assumes that a small set of proprietary, ultra-large models will remain clearly superior to cheaper or open alternatives, justifying premium pricing and high infrastructure utilization. The DeepSeek episode in January 2025 exposed how fragile that assumption can be. DeepSeek, a Chinese start-up, claimed to have trained its V3 model for under $6 million using NVIDIA’s lower-tier H800 chips, and released an R1 reasoning model that it said was 20 to 50 times cheaper to use than OpenAI’s o1 on many tasks (see Verdantix Why DeepSeek’s R1 Is Actually Good News For Enterprises Everywhere). That announcement triggered a near-instant repricing across AI-linked equities, with NVIDIA alone losing around $600 billion in market value in a single day, before partially recovering. In structural terms, the mechanism is clear: if ‘good enough’ low-cost or open models proliferate, the scarcity premium embedded in frontier lab valuations and the most power-hungry infrastructure bets comes under sustained pressure.

Phase 3: When stress turns visible

Phase 3 is where the tensions that accumulated quietly in earlier phases begin to surface in observable ways. What had previously been visible mainly in balance sheets, utilization assumptions, contract structures and unit economics now appears in reported earnings, infrastructure utilization, credit conditions and buyer behaviour. No single signal defines the transition. Instead, risk rises when multiple indicators move together, suggesting that earlier reinforcing loops are weakening and pressure is starting to travel across connected parts of the system. This phase is therefore less about prediction and more about detection: identifying when internal stresses are no longer being absorbed smoothly and are beginning to influence decisions across capital, infrastructure and demand. Key signals of stress are:

- Balance sheet strain and underwhelming earnings at infrastructure and model leaders.

The earliest visible stress appears in the quarterly results of chipmakers, hyperscalers and flagship AI partners. Growth remains strong in absolute terms, but cloud, data centre or AI segments increasingly miss or only just meet analyst expectations, while backlog growth softens and AI CAPEX guidance is subtly trimmed or pushed out. Earnings calls shift tone from ‘unconstrained AI demand’ to ‘disciplined investment’ and ‘prioritizing utilization of existing clusters’. Markets respond sharply: stocks that once rallied on any AI headline now sell off on results days when AI revenue underperforms consensus. This reflects the system’s sensitivity to even small deviations from the supercycle narrative. Pressure at a handful of central nodes begins to flow outwards into suppliers, hiring plans and valuation multiples, signalling that expectations are being tested in real time, rather than carried forward unquestioned.

- GPU and data centre overhang becoming visible.

The inference utilization gap becomes a live issue, rather than an implied one. Providers begin acknowledging lower-than-planned GPU utilization, consolidate internal workloads and slow the rollout of new clusters. Cloud platforms cut prices on GPU instances, deepen long-term reservation discounts and bundle accelerators more aggressively to stimulate demand. Data centre projects announced during peak enthusiasm shift to phased delivery, are delayed awaiting power or workload clarity, or are repurposed as more general compute. Utilization metrics begin to drift downwards, undermining the investment cases built in Phase 1. Here, pressure travels from physical assets into financial performance: under-used capacity weakens returns, which dampens appetite for further expansion and feeds back into more cautious supplier orders. The focus shifts from building capacity to sweating existing assets, marking a clear departure from the prior expansion loop.

- Cloud and model contract downsizing by key customers.

Contract structures start to reflect more sober demand expectations. Large enterprises seek to renegotiate AI-related cloud and model commitments, pushing for lower minimums, shorter durations, more break clauses and greater reliance on usage-based pricing. New deals include tighter ramp schedules and explicit ROI metrics, rather than headline growth commitments. On quarterly calls, providers increasingly reference ‘customers optimizing spend’ or slowing expansions of previously announced AI projects. This is the demand side pushing back: commitment levels signed in the supercycle no longer match realized utilization or budget thresholds. Pressure moves from under-utilized infrastructure (Phase 2) into revenue visibility (Phase 3), reducing backlog quality and making growth more dependent on demonstrated value, rather than assumed adoption. The feedback loop that once amplified supplier power begins to invert, as buyers reassert control over volume, timing and price.

- Rising mortality, downrounds and distressed M&A in AI software.

In the application layer, stress shows up first in the most fragile business models. Funding rounds shift towards flat and down valuations, with more firms relying on bridge rounds to extend runway. Lightweight and weakly differentiated applications quietly shut down, cap free tiers or remove compute-heavy features, as variable costs exceed revenue. ‘Strategic reviews’ produce acqui-hires and asset sales, where teams and models are absorbed into larger platforms at modest prices relative to prior valuations. This phase exposes how much of the application ecosystem depended on temporary subsidies and easy capital. The system begins reallocating resources: talent and intellectual property (IP) migrate to better-capitalized incumbents and vertically focused vendors, while weaker players exit. These failures, taken together, reflect pressure spreading from infrastructure economics and buyer discipline into operating costs and margins at the application frontier.

- Credit tightening and debt stress in AI-heavy infrastructure.

Tension becomes visible in lending markets. New data centre, training cluster and semiconductor fabrication facility (fab) projects face higher spreads, stricter covenants, more conservative leverage limits and longer approval cycles. Single-tenant AI sites attract closer scrutiny from credit committees around utilization risk and long-term renewal prospects. Existing projects encounter tougher refinancing conditions as maturities approach, while ratings agencies flag sensitivity to slower AI adoption, lower pricing or delayed power grid upgrades. In some cases, sponsors sell equity stakes or restructure debt to avoid covenant breaches. This is pressure flowing from earnings and utilization into the cost and availability of capital. Debt, which amplified the Phase 1 build-out, now disciplines supply, curbing marginal projects and reducing the momentum behind large-scale expansion. Financing stops being an accelerant and becomes a constraint.

- Enterprise AI budget freezes, pilot cancellations and narrative shift.

Inside enterprises, leadership behaviour shifts in visible ways. CFO-led reviews freeze new AI initiatives outside of a narrow set of proven, high-return use cases; pilot portfolios are pared back; and projects lacking clear ownership or measurable impact are cancelled, rather than extended. New proposals face stricter payback thresholds, compete with alternative technology investments, and must present hard performance metrics such as cost per task, error rates or throughput improvements. At the same time, leadership language changes from broad transformation narratives to selective, ROI-driven deployment. AI remains critical, but is no longer exempt from normal investment discipline. Demand-side pressure thus closes the loop: as enthusiasm yields to scrutiny, usage growth slows, impairing revenue assumptions, which in turn feeds back into more cautious supply-side strategies.

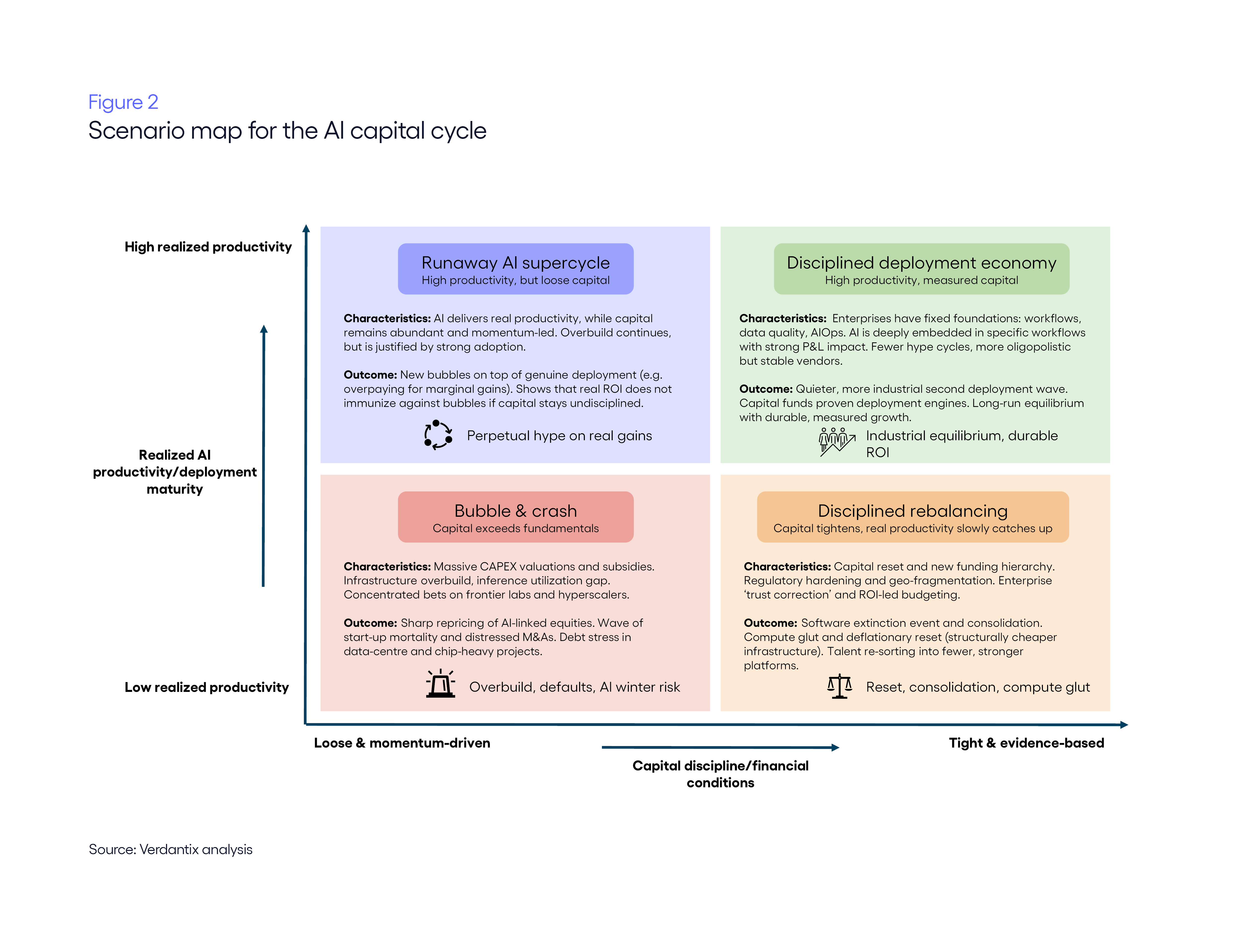

Phase 4: System rebalancing in a correction scenario

If a pronounced correction occurs and the accumulated pressures are released, the system does not collapse – it reorganizes. The same network that amplified the boom reconfigures around new constraints, power centres and economic realities. In this scenario, momentum-driven growth gives way to a more durable, evidence-based equilibrium. This section describes one possible correction path if current reinforcing loops weaken faster than real adoption, and ROI cannot catch up (see Figure 2). A softer landing is possible if discipline, utilization and governance improve earlier in the cycle. In a correction scenario, we would expect to see:

- Capital reset and a new funding hierarchy.

A broad repricing of risk establishes a new capital architecture. Valuations in hyperscalers, chipmakers and public AI plays settle at lower, more conservative multiples, reflecting realistic utilization, pricing and margin expectations. Late-stage AI funds face a thin exit market, locking in weak returns for peak-vintage vehicles and creating a prolonged funding cliff from Series A upwards. Capital rotates towards vendors with defensible economics: vertical AI providers grounded in proprietary data, operational know-how and measurable productivity gains. Boardrooms and limited partners (LPs) favour slower, evidence-based scaling, rather than pure land-grab strategies. The system’s investment pathways change: instead of capital pooling in frontier labs and infrastructure megaprojects, funding disperses across narrower, more clearly validated problems. The hierarchy resets around players that can survive without subsidy, deliver stable unit economics and anchor the next deployment cycle.

- Software extinction event and consolidation of capabilities.

The application layer undergoes a structural contraction. Many lightweight and weakly differentiated AI tools are eliminated or absorbed, unable to support themselves once credits, discounts and narrative-driven demand recede. Their teams, datasets and connectors are acquired by stronger platforms via acqui-hires or distressed asset purchases. What remains is a smaller but more robust software ecosystem, comprising a handful of integrated suites that combine workflow, data and agentic capabilities in coherent stacks, and a select group of vertical specialists solving tightly scoped, high-value problems. The overall system becomes less fragmented and more stable, with duplication and superficial innovation reduced. Capabilities consolidate into fewer, deeper nodes that own key interfaces between data, process and AI-enabled decision-making. The ecosystem thus contracts in breadth, but grows in depth.

- Compute glut and deflationary infrastructure reset.

The infrastructure layer absorbs the sharpest correction, but also lays the foundation for the next wave. Overbuilt GPU fleets and AI-optimized data centres become structurally under-utilized relative to their original business cases, making older accelerators economically obsolete ahead of depreciation schedules. Providers mark down assets, expand secondary markets for chips and shift pricing towards simpler, lower-margin models to keep utilization steady. In parallel, surplus capacity finds new anchor workloads, such as simulation, synthetic data generation, scientific computing, industrial optimization and sovereign AI programmes. As equilibrium forms, the net effect is structurally cheaper compute, at far below peak-cycle pricing. While painful for operators and investors, this reset lowers barriers for high-volume public-sector, industrial and research use cases that were previously uneconomic, enabling a more diversified and stable base of demand.

- Talent re-sorting and organizational restructuring.

Labour markets bifurcate and then stabilize around new realities. Jobs tied to speculative applications – such as generic ‘prompt engineers’, thin-layer product roles and roles at lightly differentiated AI tooling vendors – face contraction as their employers restructure or vanish. Meanwhile, demand for deep technical skills in model training, systems optimization, secure deployment and data engineering remains structurally high. Talent migrates from failed ventures into stronger platforms, cloud providers and industrial adopters, concentrating expertise within fewer, better-capitalized firms. Inside organizations, AI teams reorganize, with diffuse ‘innovation pods’ shrinking, and high-skill roles becoming embedded within line-of-business units to drive measurable outcomes, instead of experiments. The system emerges with a smaller but more capable talent base, better aligned with long-term value creation, rather than short-lived thematic cycles.

- Regulatory hardening and geo-fragmented architectures.

The post-bubble environment brings regulatory clarity and sharper regional divergence. The supervision of AI-heavy infrastructure lending becomes standardized, while disclosure, provenance and safety requirements rise, and liability chains for model-driven failures are formalized. Global fragmentation hardens as jurisdictions adopt different risk tolerances and compliance regimes. Vendors respond by operating region-specific architectures – separate model variants, data boundaries, logging regimes and deployment patterns – rather than assuming a single global stack. Governments treat compute and foundational models as strategic assets, sometimes maintaining sovereign capacity or mandating local control over sensitive AI services. Compliance burdens increase, but systemic risk falls, as safeguards and clear operating boundaries replace the permissiveness of the boom.

- Trust correction, ‘AI winter’ in capital, and enterprise ROI reset.

In a sharper correction, enterprises and users adopt AI more deliberately. Tolerance for low-quality synthetic output declines, and provenance, guardrails and human oversight become baseline expectations. Budgets rotate from exploratory copilots to a smaller set of agentic systems with proven, repeatable ROI – a shift exemplified by Verdantix data, which show the share of respondents expecting double-digit budget growth for AI-centric projects plummeting from 72% in 2024 to just 20% in 2025 (see Verdantix Global Corporate Survey 2024: Artificial Intelligence Budgets, Priorities And Tech Preferences and Verdantix Global Corporate Survey 2025: AI Budgets, Priorities And Tech Preferences). Projects that deliver measurable improvements in throughput, safety or cost efficiency continue to expand, while others are retired. In parallel, capital goes through its own winter. Limited partners (LPs) and investment committees that were burned on peak-cycle AI funds become more cautious across the board, tightening standards not only for AI, but for other frontier narratives. Funding shifts from momentum-led thematics to evidence-led theses, with a higher bar for differentiation and path-to-profitability. In this scenario, the system moves from hype-driven diffusion to value-anchored reinforcement, with solutions that can demonstrate consistent impact attracting more data, talent and budget, and serving as the foundation of a quieter, more industrial second deployment wave.

How market participants adapt

As the AI market moves from rapid expansion towards greater discipline, outcomes are no longer shaped only by macro forces such as capital cycles, regulation and infrastructure repricing, but increasingly by how vendors and buyers adjust their behaviour within those constraints. Each side acts as a control surface on the system: vendor choices influence the supply, pricing and risk profile of AI solutions, while buyer choices determine which applications compound into durable deployment and which fade out.

The sections that follow do not assume a single outcome, whether a sharp correction or a softer landing. Instead, they outline practical moves that improve resilience in either scenario. For vendors, the focus is on tightening the link between innovation, unit economics and trust. For buyers, the emphasis is on regaining control over capital allocation, dependency risk and operational quality. Together, these responses will shape how quickly the market shifts from narrative-led expansion to value-anchored deployment.

Vendor playbook for a volatile AI cycle

The rebalancing phase does not treat vendors as passengers. While macro forces – capital cycles, regulation and infrastructure repricing – sit outside any single firm’s control, vendors still have meaningful leverage over how they absorb the shock and where they land in the new equilibrium. The question is not ‘how to avoid the bubble pop’, but how to redesign their position in the system once easy capital, subsidy-driven usage and narrative-led purchasing fade. Vendors should:

- Hit ‘default alive’ economics fast – while maintaining real innovation.

Vendors need to behave as if the subsidy phase is already over, while still investing in the few things that can bend their economics. That means driving the burn multiple (net cash burn divided by net new annual recurring revenue, or ARR) towards the 1x to 1.5x range; instrumenting cost-to-serve at feature, customer and workflow level; and exiting segments where margins show no path to converging. The answer is not ‘no experiments’, but fewer, better experiments that are co-funded by customers, anchored in real workflows and designed with a clear path to scalable ARR within six to 12 months. Roadmaps should reserve capacity for these customer-backed bets, while stripping out vanity features and marketing-led prototypes. Structurally, firms that reach ‘default alive’ while still compounding their product advantage will be least exposed to capital market mood swings and can keep innovating through the downturn – as weaker, subsidy-dependent peers are forced into distressed sales or shutdowns.
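To make the two metrics above concrete, the Python sketch below computes a burn multiple and a gross margin per cost-to-serve segment, then lists segments below a margin floor as candidates to fix, reprice or exit. It is a minimal illustration under stated assumptions: the event fields (`revenue`, `compute_cost`, `support_cost`), the function names and the 30% margin floor are hypothetical, not a standard method.

```python
from collections import defaultdict

def burn_multiple(net_burn: float, net_new_arr: float) -> float:
    """Net cash burned per unit of net new ARR added over the same period."""
    if net_new_arr <= 0:
        return float("inf")  # burning cash without adding ARR
    return net_burn / net_new_arr

def segment_margins(events, by=("customer", "workflow")):
    """Gross margin per segment; `by` picks the dimensions (e.g. feature, customer, workflow)."""
    totals = defaultdict(lambda: [0.0, 0.0])  # segment -> [revenue, cost]
    for e in events:
        key = tuple(e[d] for d in by)
        totals[key][0] += e["revenue"]
        totals[key][1] += e["compute_cost"] + e["support_cost"]
    return {
        key: ((rev - cost) / rev if rev > 0 else float("-inf"))
        for key, (rev, cost) in totals.items()
    }

def exit_candidates(margins, floor=0.3):
    """Segments whose gross margin sits below the (hypothetical) floor."""
    return sorted(k for k, m in margins.items() if m < floor)

# Hypothetical cost-to-serve records for one customer and two workflows.
events = [
    {"customer": "acme", "workflow": "claims", "revenue": 1000.0, "compute_cost": 300.0, "support_cost": 100.0},
    {"customer": "acme", "workflow": "chat", "revenue": 500.0, "compute_cost": 450.0, "support_cost": 150.0},
]
print(burn_multiple(3_000_000, 2_000_000))       # 1.5
print(exit_candidates(segment_margins(events)))  # [('acme', 'chat')]
```

Keeping the segment dimensions as a parameter lets the same records be cut by feature, customer or workflow without changing the aggregation logic.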

- Go vertical and own specific workflows end to end.

General-purpose copilots that nibble at 1% to 5% of knowledge work will be easy to decommission in a budget crunch. Survivors will be those that own 25% to 50% of a clearly defined workflow in a specific domain, such as claims adjudication, drilling optimization, accounts payable (AP) automation, contract review or clinical documentation. That requires access to ‘dark matter’ data – logs, edge telemetry and idiosyncratic documents – and the ability to execute the full loop from ingestion to decision and outcome. Innovation will shift from launching new generic features to deepening automation of the same workflow, by expanding coverage, handling edge cases, integrating more systems of record, tightening controls and raising autonomy where governance allows. Rather than selling a model, vendors will sell resolved cases, clean ledgers, processed invoices and closed tickets. In system terms, the vertical focus tightens the link between experimentation, usage and measurable productivity gains, making the vendor much harder to dislodge during post-bubble consolidation.

- Fix pricing around outcome, or integrated subscription.

Seat-based pricing for ‘copilots’ misaligns incentives, because spend scales with headcount rather than with the work the AI actually completes. Vendors should instead anchor stock-keeping units (SKUs) in autonomy and attribution, adopting one of two models: metering for specific outcomes (per resolution, per document); or folding AI into the overall subscription as a core value driver. The first links spend to results; the second treats AI as standard infrastructure, rather than a luxury add-on. Both strategies avoid the friction of customers feeling ‘taxed’ for efficiency. Crucially, vendors must anticipate a pricing reset as model costs fall. Building tariff structures now that can flex with infrastructure deflation – while preserving margin via efficiency – protects the ability to innovate without constantly re-opening painful pricing conversations.
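One way to picture a tariff that flexes with infrastructure deflation is a per-outcome price indexed to the vendor's own cost per outcome, with a tolerance band so small cost moves trigger no renegotiation. The sketch below is a hypothetical design, not a recognized pricing standard: the `pass_through` share, the 10% band and the example figures are all assumptions.

```python
from dataclasses import dataclass

@dataclass
class OutcomeTariff:
    """Hypothetical per-outcome tariff indexed to the vendor's own cost per outcome."""
    price: float         # current price per resolved case or processed document
    cost_basis: float    # cost per outcome when the price was last set
    pass_through: float  # share of a cost change passed on to the customer (0 to 1)
    band: float = 0.10   # ignore cost moves within +/-10% of the basis

    def gross_margin(self) -> float:
        return (self.price - self.cost_basis) / self.price

    def reprice(self, new_cost: float) -> float:
        change = (new_cost - self.cost_basis) / self.cost_basis
        if abs(change) <= self.band:
            return self.price  # inside the band: no pricing conversation needed
        # Pass on only part of the cost change; the vendor keeps the rest as margin.
        self.price += self.pass_through * (new_cost - self.cost_basis)
        self.cost_basis = new_cost
        return self.price

tariff = OutcomeTariff(price=2.00, cost_basis=1.00, pass_through=0.5)
tariff.reprice(0.95)  # within the band: price stays at 2.00
tariff.reprice(0.60)  # model costs fall 40%: price drops to 1.80
print(round(tariff.price, 2), round(tariff.gross_margin(), 3))  # 1.8 0.667
```

In this example the customer sees a 10% price cut while the vendor's gross margin rises from 50% to about 67%, which is the "flex while preserving margin" behaviour described above. Because the pass-through is symmetric, a cost increase would only be partly recovered, so a real contract would likely treat increases separately.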

- Optimize the compute stack and design for model fungibility – to fund more R&D, not less.

The same forces that inflated the bubble – frontier scarcity and hyperscaler lock-in – will drive sharp repricing of models and GPUs as capacity overshoots demand. Vendors should assume that foundation models will become interchangeable utilities, and architect to benefit from that shift, rather than be crushed by it. Concretely, they should push as much workload as possible to cheaper small and specialized language models fine-tuned on domain data; constrain context to what actually moves accuracy; prune retries and tool calls; and use evaluation harnesses to route tasks to the lowest-cost ‘good enough’ option. Abstraction layers should make the swapping of providers routine, as prices, performance and regulation evolve, turning model deflation into a tailwind. Done well, every step down in large language model (LLM) pricing improves gross margin and funds more product R&D – rather than forcing emergency repricing or painful cuts when contracts and architecture are misaligned with the new economics.
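The routing idea above can be sketched in a few lines of Python: each model sits behind a common record holding its measured cost and its evaluation-harness accuracy per task type, and the router picks the cheapest option that clears an accuracy bar. The model names, costs and scores are hypothetical placeholders, not benchmarks of any real provider.

```python
from dataclasses import dataclass, field

@dataclass
class ModelOption:
    """One interchangeable model behind the abstraction layer (names are hypothetical)."""
    name: str
    cost_per_task: float  # measured average cost per task, e.g. from billing telemetry
    accuracy: dict[str, float] = field(default_factory=dict)  # eval-harness score per task type

def route(task_type: str, options: list[ModelOption], min_accuracy: float) -> ModelOption:
    """Pick the cheapest option whose evaluated accuracy is 'good enough' for this task."""
    eligible = [o for o in options if o.accuracy.get(task_type, 0.0) >= min_accuracy]
    if not eligible:
        raise LookupError(f"no model reaches {min_accuracy:.0%} on {task_type!r}")
    return min(eligible, key=lambda o: o.cost_per_task)

# Illustrative scores only; in practice they come from running the harness on real workloads.
options = [
    ModelOption("domain-slm", 0.002, {"invoice_extraction": 0.94, "contract_review": 0.71}),
    ModelOption("frontier-llm", 0.030, {"invoice_extraction": 0.96, "contract_review": 0.90}),
]
print(route("invoice_extraction", options, 0.90).name)  # domain-slm
print(route("contract_review", options, 0.85).name)     # frontier-llm
```

Swapping or adding a provider then means appending a new `ModelOption` with fresh evaluation scores; as prices fall, the router shifts traffic automatically without changes to the calling workflow.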

- Compete on trust, assurance and vendor viability.

As the hype recedes, buyers shift focus from feature novelty to counterparty risk, scrutinizing whether a vendor has the resilience to serve as a long-term partner. To pass this test, vendors must make observability, auditability and control first-class features, proving that the product is as stable as the business. They should offer strong service-level agreements (SLAs), transparent metrics and even indemnities to demonstrate readiness for enterprise-scale liability. Partnering with insurers to bundle risk cover can further soothe conservative internal committees. Ultimately, competing on ‘safe hands’ rather than ‘flashy demos’ creates a defensive moat: governance-focused vendors win the multi-year, sticky contracts that provide the capital cushion to outlast the coming correction.

- Plan explicitly for consolidation, partnerships and clean exits.

Not every vendor will reach independent scale, and pretending otherwise creates non-viable independents that tie up talent and customer trust without a sustainable path forward. Smaller AI vendors should view mergers and acquisitions (M&A), strategic partnerships and ‘reverse acqui-hire’ deals as core system mechanics, and build to be acquirable by design, with clean IP, simple cap tables, defensible data assets and referenceable customers. In parallel, incumbents – hyperscalers, suites and systems integrators (SIs) – should treat this as a strategic sourcing channel, building repeatable playbooks for scouting, technical diligence and post-merger integration, so that they can absorb capabilities quickly when valuations reset. Joint reference projects, marketplace listings and co-sell motions create optionality for both sides before the runway shortens or acquisition windows close. Seen in system terms, consolidation is not just an endgame for the weak, but a mechanism for recycling talent and IP into stronger nodes, preserving innovation density after the bubble bursts.

Buyer playbook for a volatile AI cycle

Buyers are not bystanders in the post-bubble environment. Enterprise decisions about what to fund, how to contract, which vendors to depend on and how to govern deployments feed directly back into the structure of the AI market. In a boom, many buyers behave as if AI is a cheap option on future capability – spreading pilots widely, accepting vendor-led terms and tolerating opaque economics. In a correction, that posture becomes a liability. The goal is not to retreat from AI, but to change the terms on which buyers participate in the system. Instead of amplifying narrative-driven growth, they can reroute capital towards a few flagship workflows, reshape contracts to share risk and reward, reduce structural dependency on single providers, and build the internal foundations and control layers that make AI deployments robust. Buyers should therefore:

- Run AI as a capital allocation portfolio and converge on a few flagship workflows.

Buyers should stop treating AI as a diffuse innovation theme and instead manage it as a staged investment portfolio with explicit gates: Explore → Prove → Scale → Retire. Every initiative must name a target workflow, accountable owner, measurable metric (cost, throughput, error rate, risk exposure) and payback window. Telemetry on usage, API calls, error rates and cloud spend should be used to classify projects into kill/fix/scale on a rolling basis. In parallel, leadership should deliberately converge activity on three to five flagship workflows where AI can realistically move P&L or enterprise risk, and declare everything else paused unless justified. This turns scattered experimentation into a small number of compounding assets that can survive budget pressure. Systemically, it replaces narrative-powered diffusion with value-driven reinforcement, as capital flows to what demonstrably works.
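As a rough sketch of the rolling kill/fix/scale classification and flagship selection described above, the Python below computes payback from telemetry-derived benefit and run cost, then classifies each initiative. It does not model the full Explore → Prove → Scale → Retire gating. The field names, the 5% error ceiling and the "fix if payback is within twice the window" rule are illustrative assumptions.

```python
from dataclasses import dataclass

@dataclass
class Initiative:
    workflow: str
    owner: str
    monthly_benefit: float   # measured saving or revenue attributed via telemetry
    monthly_run_cost: float  # cloud spend, licences and support
    upfront_cost: float
    error_rate: float
    payback_window_months: float

def payback_months(i: Initiative) -> float:
    net = i.monthly_benefit - i.monthly_run_cost
    return float("inf") if net <= 0 else i.upfront_cost / net

def classify(i: Initiative, max_error_rate: float = 0.05) -> str:
    """Rolling kill/fix/scale decision; thresholds are illustrative assumptions."""
    pb = payback_months(i)
    if pb <= i.payback_window_months and i.error_rate <= max_error_rate:
        return "scale"
    if pb <= 2 * i.payback_window_months:
        return "fix"  # near-viable: fix quality or cost before scaling
    return "kill"

def flagships(portfolio: list[Initiative], n: int = 5) -> list[str]:
    """The n 'scale' initiatives with the highest net monthly value."""
    ready = [i for i in portfolio if classify(i) == "scale"]
    ready.sort(key=lambda i: i.monthly_benefit - i.monthly_run_cost, reverse=True)
    return [i.workflow for i in ready[:n]]

portfolio = [
    Initiative("AP invoice matching", "Finance Ops", 50_000, 10_000, 200_000, 0.02, 12),
    Initiative("Claims triage", "Claims", 30_000, 20_000, 150_000, 0.08, 12),
    Initiative("Generic chat assistant", "IT", 5_000, 8_000, 100_000, 0.03, 12),
]
for i in portfolio:
    print(i.workflow, classify(i))
print(flagships(portfolio))  # ['AP invoice matching']
```

Here the invoice-matching workflow pays back in five months and scales, claims triage is close to viable but too error-prone (fix), and the chat assistant never covers its run cost (kill).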

- Apply buyer-side leverage to reprice risk and reward in AI contracts.

As the market cools, buyers should use their renewed leverage to redesign commercial terms around risk-sharing, rather than vendor optimism. They should shift away from pure per-seat, per-token or ‘all you can experiment’ structures, towards hybrid contracts that combine a modest platform fee with outcome-linked meters: per resolved case, processed document, qualified lead or percentage of handle time reduced. For uncertain deployments, they can insist on phased commitments with explicit renegotiation rights tied to achieved metrics, instead of vague roadmap promises. Termination rights, step-down clauses and minimum-commit rebates should be built in, to limit downside if adoption lags. At the same time, buyers should allow for upside through gain-share and expansion bands, so that vendors are rewarded for genuinely increasing autonomy and productivity. Properly designed, contracts become a system stabilizer, in which buyers avoid underwriting bubble-era volumes, while still enabling credible partners to invest for shared success.
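The hybrid structure above can be expressed as a small billing function: a modest platform fee, an outcome meter, a gain-share on measured savings above an agreed threshold, a cap on total monthly charges and a renegotiation trigger tied to achieved volume. This is a hypothetical sketch; the parameter names and figures are assumptions, and real contracts would add termination, step-down and minimum-commit terms.

```python
from dataclasses import dataclass

@dataclass
class HybridContract:
    """Hypothetical hybrid AI contract: platform fee plus outcome meter, gain-share and a cap."""
    platform_fee: float      # modest fixed monthly fee
    rate_per_outcome: float  # e.g. per resolved case or processed document
    saving_threshold: float  # monthly saving the buyer keeps in full before gain-share starts
    gain_share: float        # vendor's share of measured savings above the threshold
    monthly_cap: float       # ceiling on the total monthly charge (limits buyer exposure)
    review_floor: int        # outcomes below this trigger the renegotiation right

    def invoice(self, outcomes: int, measured_saving: float) -> float:
        metered = self.platform_fee + self.rate_per_outcome * outcomes
        bonus = self.gain_share * max(0.0, measured_saving - self.saving_threshold)
        return min(metered + bonus, self.monthly_cap)

    def renegotiation_due(self, outcomes: int) -> bool:
        return outcomes < self.review_floor

contract = HybridContract(5_000, 2.0, 20_000, 0.2, 50_000, 1_000)
print(contract.invoice(4_000, 30_000))     # 15000.0: fee + meter + gain-share
print(contract.invoice(500, 5_000))        # 6000.0: adoption lags, no gain-share
print(contract.renegotiation_due(500))     # True
print(contract.invoice(30_000, 100_000))   # 50000: capped
```

The gain-share rewards the vendor only when measured savings exceed the agreed threshold, while the cap and renegotiation trigger bound the buyer's exposure in both directions.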

- De-risk concentration on single models, vendors and clouds.

Many buyers have quietly accumulated structural dependency on a small set of frontier labs and hyperscalers. That concentration is manageable during a boom – but dangerous in a correction. Buyers should architect critical workflows so that they can run across more than one model provider and, where feasible, more than one cloud. At the commercial layer, they should insist on portability rights, with usable data, prompt and configuration export, and clear rights around fine-tunes and derivatives. Periodically, they should run ‘substitution drills’ on paper: if this vendor were acquired, failed or repriced overnight, what would break and how fast could we move? In procurement, they should assess vendor balance sheets, burn multiples and ownership structure, alongside technical fit. The focus is not on spreading spend thinly, but on preserving credible outside options, so that buyers can renegotiate from a position of strength as post-bubble repricing and consolidation reshape the supply side.
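A paper substitution drill can start from a simple dependency map. The Python sketch below, assuming a hypothetical mapping from each workflow to the providers it has already been tested and contracted to run on, reports which workflows can fail over and which would break if one provider were acquired, failed or repriced overnight. Workflow and provider names are placeholders.

```python
def substitution_drill(qualified: dict[str, set[str]], lost_provider: str) -> dict[str, str]:
    """Impact on each affected workflow if one provider disappears or reprices overnight.

    `qualified` maps each workflow to the providers it has been tested and contracted to run on.
    """
    impact = {}
    for workflow, providers in sorted(qualified.items()):
        if lost_provider not in providers:
            continue  # workflow does not depend on this provider
        fallback = sorted(providers - {lost_provider})
        if fallback:
            impact[workflow] = "fail over to " + ", ".join(fallback)
        else:
            impact[workflow] = "broken: no qualified alternative"
    return impact

qualified = {
    "claims_triage": {"provider_a", "provider_b"},
    "contract_review": {"provider_a"},
    "ap_automation": {"provider_c"},
}
print(substitution_drill(qualified, "provider_a"))
# {'claims_triage': 'fail over to provider_b', 'contract_review': 'broken: no qualified alternative'}
```

Running the drill for every provider in turn surfaces the single-provider workflows where a credible outside option is missing, which is where portability rights and a second qualified model matter most.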

- Build the real foundations: data quality, standardized processes and change capacity.

The bubble framed models as the scarce input; in practice, the binding constraints are fragmented data, undocumented processes and limited organizational capacity for change. Buyers should redirect a meaningful share of ‘AI budget’ to data engineering, lineage, access controls, master data management and fit-for-purpose labelling, where required. At the same time, target workflows must be standardized and observable, with clear steps, defined hand-offs, logged outcomes and known exception paths. Training and operating model changes must be funded, so that frontline teams understand what the systems are doing, when to override them, and how to improve them. This creates a stable substrate on which any model or vendor can operate. Systemically, it decouples enterprise AI value from any single provider’s ‘magic’, and allows buyers to harvest most of the benefit from cheaper, commoditized models, as the infrastructure stack deflates.

- Create an internal AI operations function to evaluate, govern and scale systems.

To survive a correction with durable capability, buyers need an internal control layer for AI – not just procurement and IT oversight. This does not require a research lab, but it does involve the creation of a small cross-functional AI operations (AIOps) group, spanning engineering, data, security, risk and the business. Its mandate is to run evaluation harnesses on real workloads, benchmark vendors, monitor the cost and performance of deployed systems, manage rollouts and rollbacks, and own the internal standards for logging, prompts, red-teaming and incident response. The team must be empowered to slow or stop deployments that fail quality or risk thresholds, irrespective of executive enthusiasm. In system terms, AIOps becomes the organization’s local feedback controller, damping external hype, catching emerging failure modes early, and ensuring that the enterprise AI portfolio reflects strategy and risk appetite, rather than external marketing cycles.
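The power to stop deployments that fail quality or risk thresholds can be encoded as a release gate that an AIOps team runs on evaluation results before promotion. The sketch below is a hypothetical minimal version: the metrics, threshold values and function names are assumptions, and the gate deliberately offers no override parameter.

```python
from dataclasses import dataclass

@dataclass
class EvalResult:
    accuracy: float         # share of evaluation cases passed on real workloads
    p95_latency_ms: float
    cost_per_task: float
    red_team_failures: int  # unresolved findings from adversarial testing

@dataclass
class Thresholds:
    min_accuracy: float
    max_p95_latency_ms: float
    max_cost_per_task: float
    max_red_team_failures: int = 0

def release_gate(result: EvalResult, limits: Thresholds) -> tuple[str, list[str]]:
    """Return ('promote', []) or ('block', reasons). There is deliberately no override flag."""
    reasons = []
    if result.accuracy < limits.min_accuracy:
        reasons.append("accuracy below threshold")
    if result.p95_latency_ms > limits.max_p95_latency_ms:
        reasons.append("latency above threshold")
    if result.cost_per_task > limits.max_cost_per_task:
        reasons.append("cost above threshold")
    if result.red_team_failures > limits.max_red_team_failures:
        reasons.append("open red-team findings")
    return ("block", reasons) if reasons else ("promote", [])

limits = Thresholds(min_accuracy=0.92, max_p95_latency_ms=2_000, max_cost_per_task=0.05)
print(release_gate(EvalResult(0.95, 1_400, 0.03, 0), limits))  # ('promote', [])
print(release_gate(EvalResult(0.89, 1_100, 0.02, 2), limits))
# ('block', ['accuracy below threshold', 'open red-team findings'])
```

The same check can be re-run periodically against production telemetry, so a deployed system that drifts below its thresholds becomes a rollback candidate rather than waiting for the next release.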

About the Authors

Henry Kirkman

Industry Analyst

Henry is an Industry Analyst at Verdantix. His current research agenda focuses on quality management, field service management and industrial applications of AI, including Gen...


Chris Sayers

Senior Manager

Chris is a Senior Manager at Verdantix. His current research agenda targets enterprise AI integration and adoption, AI market trends and agentic AI. Chris joined Verdantix in ...

